Don't Use Frames to Measure Performance

Why does this even matter?

Some time ago, I attended a Game Dev Conference, and during one of the talks, the presenter said something like, "We changed one thing and gained 5 FPS just because of that." At that moment, I thought, "Cool, but how significant was that gain, really?"

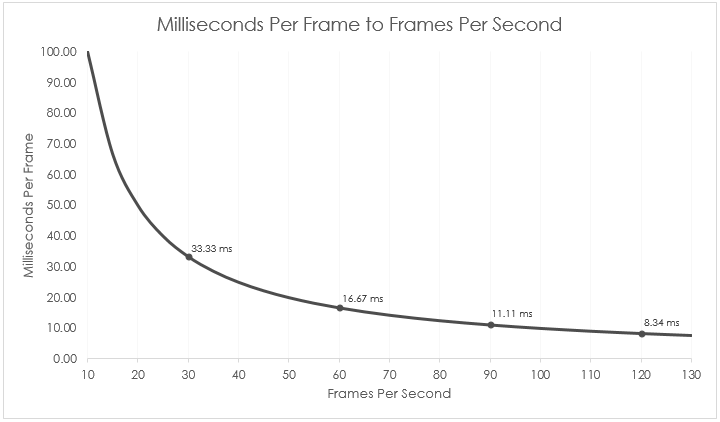

Using frames per second as the unit is perfectly fine when we talk about performance budgets. For example, saying "We're targeting 60 FPS for our game" is clear and specific. But when someone says they gained a few FPS, it can be confusing, because the impact of that gain isn't always the same. Speeding up from 10 FPS to 15 FPS is a noticeable improvement, while going from 120 FPS to 125 FPS may not make much of a difference at all.
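Converting both examples to time per frame (1000 divided by the frame rate gives the frame time in milliseconds) shows how different these two "+5 FPS" gains really are:

\[\frac{1000}{10} - \frac{1000}{15} \approx 100 - 66.67 = 33.33\ \text{ms}\]

\[\frac{1000}{120} - \frac{1000}{125} \approx 8.33 - 8.00 = 0.33\ \text{ms}\]

Both changes would be reported as "5 FPS", yet the first one removes 100 times more time from every frame.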

Frame Time vs Frame Rate

When we say that our game runs at, for example, 60 FPS, we're talking about Frame Rate: the game is capable of rendering 60 frames per second. From that, we can easily calculate the Frame Time, which tells us how much time the hardware has to render a single frame to maintain that frame rate. Since this time is very short, it's typically measured in milliseconds (1 ms = 0.001 seconds).

For example:

- If the game has a Frame Rate of 60 FPS, its Frame Time is 16.67 ms

- If the game has a Frame Rate of 120 FPS, its Frame Time is 8.33 ms

To convert between Frame Time and Frame Rate, you can use the formulas below:

\[\frac{1000}{\text{fps}} = \text{ms}\]

\[\frac{1000}{\text{ms}} = \text{fps}\]

As you probably noticed, the higher the frame rate, the lower the frame time. This makes perfect sense: if we want to generate more frames within the same amount of time, each frame must be rendered more quickly.
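The two formulas translate directly into code. Here is a minimal Python sketch; the function names `fps_to_ms` and `ms_to_fps` are illustrative and not taken from any engine or library API:

```python
def fps_to_ms(fps: float) -> float:
    """Frame time in milliseconds for a given frame rate."""
    if fps <= 0:
        raise ValueError("frame rate must be positive")
    return 1000.0 / fps


def ms_to_fps(ms: float) -> float:
    """Frame rate for a given frame time in milliseconds."""
    if ms <= 0:
        raise ValueError("frame time must be positive")
    return 1000.0 / ms


if __name__ == "__main__":
    # Print the frame time for a few common frame-rate targets.
    for fps in (30, 60, 120):
        print(f"{fps} FPS -> {fps_to_ms(fps):.2f} ms")
```

Because both directions use the same division, converting a value one way and then back returns the original number (up to floating-point rounding).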

Thus, when someone says that an optimization gained 20 FPS:

- It might be an improvement of 66.67 ms per frame, if the game went from 10 to 30 FPS

- It might be an improvement of 1.67 ms per frame, if the game went from 100 to 120 FPS

Without additional context, the number alone is meaningless.
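To check numbers like these, the frame time before and after the change can simply be subtracted. A small Python sketch, where `frame_time_saved_ms` is a hypothetical helper name used only for illustration:

```python
def frame_time_saved_ms(fps_before: float, fps_after: float) -> float:
    """Milliseconds removed from each frame when the rate rises from fps_before to fps_after."""
    return 1000.0 / fps_before - 1000.0 / fps_after


# The same "+20 FPS" headline from two different starting points.
for before, after in [(10, 30), (100, 120)]:
    saved = frame_time_saved_ms(before, after)
    print(f"{before} -> {after} FPS saves {saved:.2f} ms per frame")
```

This prints 66.67 ms for the first pair and 1.67 ms for the second, matching the two cases listed above.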

Why is Frame Time better for talking about performance?

When we're writing code, what really matters is hitting the frame budget. If someone tells us the target is 60 FPS, we immediately know we have 16.67 milliseconds per frame to execute everything the game needs to do (rendering, logic, physics, input handling, and so on). From this point on, all performance-related measurements are in milliseconds. If we optimize something and save 1 millisecond, it is always 1 millisecond, regardless of the overall frame time. That gain has a fixed, measurable value, which makes it much easier to reason about performance and optimization.
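Working in milliseconds turns a frame budget check into simple addition. The Python sketch below assumes a 60 FPS target and uses made-up per-subsystem costs purely for illustration; in a real project these numbers would come from a profiler:

```python
TARGET_FPS = 60
BUDGET_MS = 1000.0 / TARGET_FPS  # about 16.67 ms per frame

# Hypothetical per-frame costs in milliseconds (illustrative only).
frame_costs_ms = {
    "rendering": 9.0,
    "game logic": 3.5,
    "physics": 2.5,
    "input": 0.5,
}

total_ms = sum(frame_costs_ms.values())
headroom_ms = BUDGET_MS - total_ms
print(f"budget {BUDGET_MS:.2f} ms, used {total_ms:.2f} ms, headroom {headroom_ms:.2f} ms")

# Saving 1 ms in physics frees exactly 1 ms of budget, whatever the other costs are.
frame_costs_ms["physics"] -= 1.0
print(f"after optimization: headroom {BUDGET_MS - sum(frame_costs_ms.values()):.2f} ms")
```

The headroom grows from 1.17 ms to 2.17 ms: the optimization is worth exactly the milliseconds it saved, independent of how the rest of the frame is spent.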

On the other hand, when someone says "We gained 5 FPS", the impact of that statement is unclear. It could mean a significant improvement or something nearly unnoticeable; it all depends on the starting frame rate. Gaining 5 FPS from 20 FPS saves 10 ms per frame, which is a big deal; gaining 5 FPS from 120 FPS saves about 0.33 ms, which is barely perceptible. That's why using frame time instead of FPS gives a much more consistent and meaningful way to evaluate performance improvements.
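The same problem appears from the other direction: a fixed saving in milliseconds shows up as very different FPS gains depending on the baseline. A minimal Python sketch, where `fps_after_saving` is a hypothetical helper written for this example:

```python
def fps_after_saving(fps_before: float, saved_ms: float) -> float:
    """Frame rate after removing saved_ms from every frame."""
    frame_ms = 1000.0 / fps_before
    if saved_ms >= frame_ms:
        raise ValueError("cannot save more time than the frame takes")
    return 1000.0 / (frame_ms - saved_ms)


# The same 1 ms optimization applied to games running at different frame rates.
for fps in (20, 60, 120):
    new_fps = fps_after_saving(fps, 1.0)
    print(f"{fps} FPS -> {new_fps:.1f} FPS (+{new_fps - fps:.1f})")
```

At 20 FPS the 1 ms saving appears as roughly +0.4 FPS, while at 120 FPS the very same saving reads as roughly +16.4 FPS, even though the amount of work removed is identical.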

Summary

- When talking about a frame budget, using FPS is perfectly fine

- When describing how much a specific operation improved, use milliseconds. This gives your audience an absolute, consistent number that always has the same meaning.

- If you want to mention a gain in FPS, always include context (e.g., the starting and ending frame rates) to avoid confusion.
